Embedded Systems programming: Little Endian/Big Endian & TCP Sockets - 2020

Endian, or endianness, refers to how bytes are ordered within computer memory.

If we want to represent a two-byte hex number, say c23f, we store it in two sequential bytes, c2 followed by 3f. This seems like the natural way to do it. A number stored this way, with the big end first, is called big-endian. However, some computers, namely anything with an Intel or Intel-compatible processor, store the bytes reversed, so c23f is stored in memory as the sequential bytes 3f followed by c2. This storage method is called little-endian.

| Little Endian | Big Endian | Bi-Endian |
|---|---|---|
| Intel x86 and x86-64 series, ARM before version 3, 6502 (including 65802, 65C816), Z80 (including Z180, eZ80 etc.), MCS-48, 8051, DEC Alpha, Altera Nios II, Atmel AVR, SuperH, VAX, PDP-11 | Motorola 6800 and 68k series, SPARC before V9, Xilinx Microblaze, IBM POWER, IBM System/360, System/370, ESA/390, z/Architecture, PDP-10 | ARM 3 and above, PowerPC, Alpha, SPARC V9, MIPS, PA-RISC, SuperH SH-4, IA-64 |

Endianness is important to know when reading or writing data structures, especially across networks, so that different applications can communicate with each other. Sometimes the endianness is hidden from the developer. Java uses a fixed endianness to store data regardless of the underlying platform's endianness, so data exchanges between two Java applications won't normally be affected by endianness. But other languages, C in particular, do not specify an endianness for data storage, leaving the implementation free to choose the endianness that works best for the platform.
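One way to keep exchanged data independent of the host's byte order is to serialize each value byte by byte with shifts, so the layout in the buffer is fixed by the code rather than by the CPU. The following is a minimal sketch of that idea; the helper names `store_be32` and `load_be32` are hypothetical and not part of any library.

```cpp
#include <cstdint>

// Hypothetical helper: writes v into out[0..3], most significant byte first.
void store_be32(std::uint32_t v, unsigned char out[4]) {
    out[0] = static_cast<unsigned char>(v >> 24);
    out[1] = static_cast<unsigned char>(v >> 16);
    out[2] = static_cast<unsigned char>(v >> 8);
    out[3] = static_cast<unsigned char>(v);
}

// Hypothetical helper: rebuilds the value from four big-endian bytes.
std::uint32_t load_be32(const unsigned char in[4]) {
    return (static_cast<std::uint32_t>(in[0]) << 24) |
           (static_cast<std::uint32_t>(in[1]) << 16) |
           (static_cast<std::uint32_t>(in[2]) << 8)  |
            static_cast<std::uint32_t>(in[3]);
}
```

Because shifts operate on the value and not on its memory layout, a buffer written by `store_be32` on a little-endian machine can be read back with `load_be32` on a big-endian machine, and vice versa.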

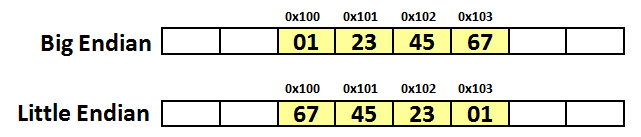

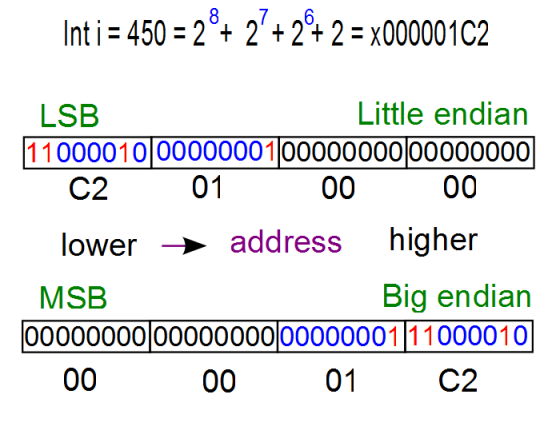

On big-endian systems (PowerPC, SPARC, and Internet protocols), the 32-bit value 0x01234567 is stored as the four bytes 0x01, 0x23, 0x45, 0x67, while on little-endian systems (Intel x86), it is stored in reverse order, 0x67, 0x45, 0x23, 0x01:

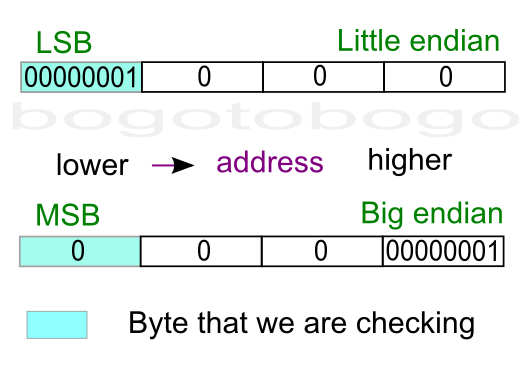

The picture below is a diagram for the example that checks whether a machine is little endian or big endian. The box shaded blue is the byte we are checking, because it is where a one-byte type (char) will be stored. The diagram shows where the 4-byte integer value 1 is stored.

We can distinguish between the LSB and the MSB because the value 1, stored as an integer, has 1 in its LSB and 0 in its MSB.

Note that a little endian machine will place the 1 (0x01, the LSB) into the lowest memory address location. However, a big endian machine will put the 0 (0x00, the MSB) into the lowest memory address location.

Source A

    #include <iostream>

    using namespace std;

    /* If the dereferenced pointer is 1, the machine is little-endian;
       otherwise the machine is big-endian. */
    int endian() {
        int one = 1;
        char *ptr;
        ptr = (char *)&one;   // points at the lowest-addressed byte of one
        return (*ptr);
    }

    int main()
    {
        if (endian())
            cout << "little endian\n";
        else
            cout << "big endian\n";
    }

Source B

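    #include <iostream>

    using namespace std;

    /* Returns 1 on a little-endian machine, 0 on a big-endian one.
       Note: reading a union member other than the one last written is
       allowed in C, but is formally undefined behavior in C++, even
       though common compilers support it. */
    int endian() {
        union {
            int one;
            char ch;   // overlays the lowest-addressed byte of one
        } endn;

        endn.one = 1;
        return endn.ch;
    }

    int main()
    {
        if (endian())
            cout << "little endian\n";
        else
            cout << "big endian\n";
    }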

It is tempting to use bit operations for this problem. However, the bit shift operators work on the integer's value, without any knowledge of the internal byte order, and that property prevents us from using them to determine byte order.
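The difference can be illustrated with a small sketch that extracts a byte in two ways: by shifting the value, and by copying the bytes out of memory. The function names `byte_by_shift` and `byte_in_memory` are hypothetical, chosen only for this illustration.

```cpp
#include <cstdint>
#include <cstring>

// Byte n counted from the least significant end of the VALUE.
// The result is the same on every machine, whatever its byte order.
std::uint8_t byte_by_shift(std::uint32_t v, unsigned n) {
    return static_cast<std::uint8_t>((v >> (8 * n)) & 0xffu);
}

// Byte n counted from the lowest ADDRESS of the stored value.
// The result depends on the machine's byte order.
std::uint8_t byte_in_memory(std::uint32_t v, unsigned n) {
    unsigned char bytes[sizeof v];
    std::memcpy(bytes, &v, sizeof v);
    return bytes[n];
}
```

For the value 0x01234567, `byte_by_shift(v, 0)` is 0x67 everywhere, while `byte_in_memory(v, 0)` is 0x67 on a little-endian machine and 0x01 on a big-endian one. Only the second function observes the storage order.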

To check the endianness, we can simply print each of the four bytes of an integer, one per line, together with the address of that byte.

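    #include <stdio.h>

    int main()
    {
        int a = 12345; // 0x00003039
        unsigned char *ptr = (unsigned char *)&a;

        /* Print each byte of a with its address, lowest address first. */
        for (size_t i = 0; i < sizeof(a); i++) {
            printf("%p\t0x%.2x\n", (void *)(ptr + i), *(ptr + i));
        }
        return 0;
    }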

Output:

    0088F8D8    0x39
    0088F8D9    0x30
    0088F8DA    0x00
    0088F8DB    0x00

As we see from the output, the LSB (0x39) was written first, at the lowest address, which indicates that my machine is little endian.

In this section, we address the issue of byte order in communications between hosts and the Internet.

Network stacks and communication protocols must also define their endianness. Otherwise, two nodes of different endianness would be unable to communicate. This is a more substantial example of endianness affecting the embedded programmer. As it turns out, all of the protocol layers in the TCP/IP suite are defined to be big endian. In other words, any 16- or 32-bit value within the various layer headers must be sent and received with its most significant byte first.
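On POSIX systems, the standard conversion functions `htonl()` and `ntohl()` are declared in `<arpa/inet.h>`. The sketch below assumes such a platform and uses a hypothetical helper, `wire_byte`, to show that the bytes of a converted value sit in memory most significant byte first, regardless of the host's own byte order.

```cpp
#include <arpa/inet.h>  // htonl, ntohl (assumes a POSIX platform)
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical helper: returns byte i (0 = lowest address) of host_value
// after it has been converted to network byte order.
std::uint8_t wire_byte(std::uint32_t host_value, std::size_t i) {
    std::uint32_t net = htonl(host_value);
    unsigned char bytes[sizeof net];
    std::memcpy(bytes, &net, sizeof net);
    return bytes[i];
}
```

For the host value 0x01234567, bytes 0 through 3 are 0x01, 0x23, 0x45, 0x67 on any host, and `ntohl(htonl(x))` returns `x` unchanged.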

Here is one way to define the byte-order conversion macros htons(), htonl(), ntohs(), and ntohl():

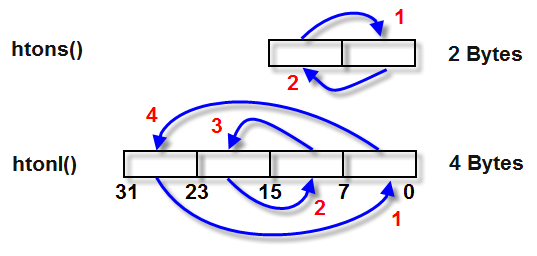

    #include <stdint.h>   /* uint16_t, uint32_t */

    #if defined(BIG_ENDIAN) && !defined(LITTLE_ENDIAN)

    /* Host order already matches network order: nothing to do. */
    #define htons(A) (A)
    #define htonl(A) (A)
    #define ntohs(A) (A)
    #define ntohl(A) (A)

    #elif defined(LITTLE_ENDIAN) && !defined(BIG_ENDIAN)

    /* Swap the bytes to convert between host and network order. */
    #define htons(A) ((((uint16_t)(A) & 0xff00) >> 8) | \
                      (((uint16_t)(A) & 0x00ff) << 8))

    #define htonl(A) ((((uint32_t)(A) & 0xff000000) >> 24) | \
                      (((uint32_t)(A) & 0x00ff0000) >> 8)  | \
                      (((uint32_t)(A) & 0x0000ff00) << 8)  | \
                      (((uint32_t)(A) & 0x000000ff) << 24))

    /* Swapping is its own inverse, so the reverse conversions are the same. */
    #define ntohs htons
    #define ntohl htonl

    #else

    #error "Must define one of BIG_ENDIAN or LITTLE_ENDIAN"

    #endif
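Function-like macros such as these evaluate their argument more than once, so an expression like `htons(x++)` would increment `x` twice on a little-endian build. As a hedged alternative, the same byte swaps can be written as inline functions. The names `swap16` and `swap32` below are hypothetical and do not come from any library.

```cpp
#include <cstdint>

// Hypothetical helper: swaps the two bytes of a 16-bit value.
// The argument is evaluated exactly once.
inline std::uint16_t swap16(std::uint16_t v) {
    return static_cast<std::uint16_t>((v >> 8) | (v << 8));
}

// Hypothetical helper: reverses the four bytes of a 32-bit value.
inline std::uint32_t swap32(std::uint32_t v) {
    return ((v & 0xff000000u) >> 24) |
           ((v & 0x00ff0000u) >> 8)  |
           ((v & 0x0000ff00u) << 8)  |
           ((v & 0x000000ffu) << 24);
}
```

On a little-endian host these functions could stand in for both the host-to-network and the network-to-host conversions, since applying the swap twice returns the original value. On a big-endian host no swap is needed at all.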

The picture below illustrates the byte-shift operations performed by htons() and htonl().
