Matlab Tutorial : Video Processing 2 - Face detection and CamShift Tracking

This is based on Face Detection and Tracking Using CAMShift. I'm hoping I'll be able to add some value to the reference material.

This one failed. We'll see why.

For the details of the technical aspect, please visit my OpenCV page, Image object detection : Face detection using Haar Cascade Classifiers.

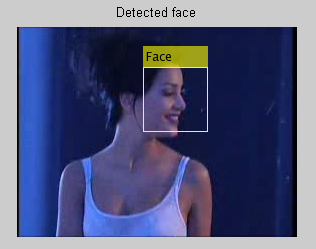

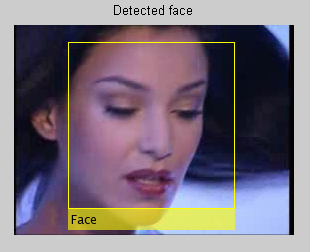

Before we start tracking a face, we should be able to detect it.

In Matlab, we use vision.CascadeObjectDetector() to detect the location of a face in a video frame acquired by a step() function. It uses the Viola-Jones detection algorithm (a cascade of classifiers applied to a sliding window at multiple scales) and a trained classification model for detection. By default, the detector is configured to detect faces, but it can be configured for other object types.

% Read a video frame and run the detector.

videoFileReader = vision.VideoFileReader('verona.wmv');

videoFrame = step(videoFileReader);

% Create a cascade detector object.

faceDetector = vision.CascadeObjectDetector();

bbox = step(faceDetector, videoFrame);

% Draw the returned bounding box around the detected face.

videoOut = insertObjectAnnotation(videoFrame,'rectangle',bbox,'Face');

figure, imshow(videoOut), title('Detected face');
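The detector returns one row per detection in [x y width height] form, so the result can have zero, one, or several rows. The code above simply annotates whatever comes back. As a minimal sketch of how one might pick a single face from such a result, the snippet below keeps the largest box and handles the empty case. The bboxes values are made up for illustration, and the "largest box wins" rule is an assumption of mine, not something the Matlab doc prescribes.

```matlab
% Hypothetical detector output: one row per detection, [x y width height].
bboxes = [ 30  40  50  50;
          200  60 120 120;
           10  10  24  24];

if isempty(bboxes)
    % Nothing was detected in this frame; there is no box to track.
    faceBox = [];
    disp('No face detected in this frame.');
else
    % Keep the detection with the largest area (an illustrative rule only).
    areas = bboxes(:,3) .* bboxes(:,4);
    [~, idx] = max(areas);
    faceBox = bboxes(idx,:);
    fprintf('Selected box: [%d %d %d %d]\n', faceBox);
end
```

With a real detector, bboxes would be replaced by the output of step(faceDetector, videoFrame).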

The Matlab doc says: "You can use the cascade object detector to track a face across successive video frames. However, when the face tilts or the person turns their head, you may lose tracking. This limitation is due to the type of trained classification model used for detection. To avoid this issue, and because performing face detection for every video frame is computationally intensive, this example uses a simple facial feature for tracking."

Actually, when I was working with OpenCV, I saw face detection fail for tilted faces and for faces that were not facing the camera. So, for the detector to be more general and robust, it needs a better-trained classification model, as the doc suggests.

We can locate a face in our video. Now what?

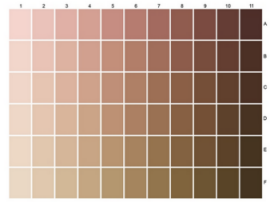

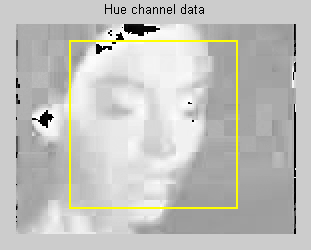

The next step is to identify a feature that will help us track the face. For example, we can use shape, color, or some other property. We need a feature that is unique to our target and that remains invariant even when the target moves.

In this example, we'll follow the Matlab doc and use skin tone as the feature to track. The doc says: "the skin tone provides a good deal of contrast between the face and the background and does not change as the face rotates or moves."

When I googled "skin tone face detection", I found that skin tone has indeed been widely used for face detection. In the same context, skin tone is also used in "face segmentation".
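One property that makes hue a convenient stand-in for skin tone is that it is largely insensitive to brightness: darkening a color changes its Value in HSV but not its Hue. The sketch below checks this with rgb2hsv on two made-up RGB triples (a skin-like color and the same color at half brightness). The specific numbers are assumptions for illustration only.

```matlab
% A made-up skin-like RGB color and the same color at half brightness.
skin   = [0.80 0.60 0.50];
shaded = 0.5 * skin;

% rgb2hsv treats an M-by-3 matrix as a list of colors: columns are H, S, V.
hsvVals = rgb2hsv([skin; shaded]);

hueDiff = abs(hsvVals(1,1) - hsvVals(2,1));   % difference in Hue
valDiff = abs(hsvVals(1,3) - hsvVals(2,3));   % difference in Value

fprintf('Hue difference:   %g\n', hueDiff);
fprintf('Value difference: %g\n', valDiff);
```

The hue of the two colors matches while the value differs, which is why the tracking code works on the Hue channel alone.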

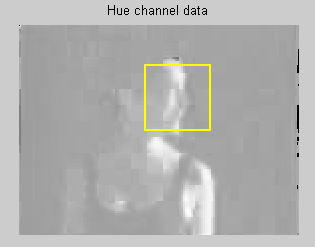

Here is the code responsible for the image above:

% Read a video frame and run the detector.

videoFileReader = vision.VideoFileReader('verona.wmv');

videoFrame = step(videoFileReader);

% Create a cascade detector object.

faceDetector = vision.CascadeObjectDetector();

bbox = step(faceDetector, videoFrame);

% Draw the returned bounding box around the detected face.

videoOut = insertObjectAnnotation(videoFrame,'rectangle',bbox,'Face');

figure, imshow(videoOut), title('Detected face');

% Get the skin tone information by extracting the Hue

% from the video frame

% converted to the HSV color space.

[hueChannel,~,~] = rgb2hsv(videoFrame);

% Display the Hue Channel data

% and draw the bounding box around the face.

figure, imshow(hueChannel), title('Hue channel data');

rectangle('Position',bbox(1,:),'LineWidth',2,'EdgeColor',[1 1 0])

The doc continues: "With the skin tone selected as the feature to track, you can now use the vision.HistogramBasedTracker for tracking. The histogram based tracker uses the CAMShift algorithm, which provides the capability to track an object using a histogram of pixel values. In this example, the Hue channel pixels are extracted from the nose region of the detected face. These pixels are used to initialize the histogram for the tracker. The example tracks the object over successive video frames using this histogram."
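To make the idea of tracking with "a histogram of pixel values" more concrete, here is a toy sketch in base Matlab. It is not the implementation of vision.HistogramBasedTracker. It builds a hue histogram from a sample patch, back-projects it onto a synthetic hue image, and then runs plain mean shift with a fixed-size window. CAMShift additionally adapts the window size and orientation, which this sketch leaves out. The image, the bin count, and the names hue, model, binOf and win are all made up for illustration.

```matlab
% Synthetic hue image: background hue 0.6, target patch with hue 0.05.
hue = 0.6 * ones(60, 80);
hue(31:40, 51:60) = 0.05;            % target: rows 31-40, columns 51-60

% Model histogram built from a sample inside the target (like the nose region).
nBins  = 16;
binOf  = @(h) min(floor(h * nBins) + 1, nBins);   % hue in [0,1] -> bin index
sample = hue(33:38, 53:58);
model  = accumarray(binOf(sample(:)), 1, [nBins 1]);
model  = model / max(model);

% Back-projection: every pixel gets the model weight of its own hue bin.
prob = reshape(model(binOf(hue(:))), size(hue));

% Mean shift: move a fixed-size window to the weighted centroid until it settles.
win = [44 26 10 10];                 % [x y width height], partly on the target
for iter = 1:20
    rows = win(2):(win(2) + win(4) - 1);
    cols = win(1):(win(1) + win(3) - 1);
    w    = prob(rows, cols);
    m00  = sum(w(:));
    if m00 == 0
        break;                       % no target-colored pixels in the window
    end
    [C, R] = meshgrid(cols, rows);
    cx = sum(C(:) .* w(:)) / m00;    % weighted centroid, column coordinate
    cy = sum(R(:) .* w(:)) / m00;    % weighted centroid, row coordinate
    newWin = [round(cx - win(3)/2 + 0.5), round(cy - win(4)/2 + 0.5), win(3), win(4)];
    if isequal(newWin, win)
        break;                       % converged
    end
    win = newWin;
end
fprintf('Final window: [%d %d %d %d]\n', win);
```

Starting from a window that only partly overlaps the patch, the window slides onto the target. The same back-projection also explains a weakness: any other region with a similar hue scores just as highly as the face.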

The final code:

% Read a video frame and run the detector.

videoFileReader = vision.VideoFileReader('verona.wmv');

videoFrame = step(videoFileReader);

% Create a cascade detector object.

faceDetector = vision.CascadeObjectDetector();

bbox = step(faceDetector, videoFrame);

% Draw the returned bounding box around the detected face.

videoOut = insertObjectAnnotation(videoFrame,'rectangle',bbox,'Face');

figure, imshow(videoOut), title('Detected face');

% Get the skin tone information by extracting the Hue

% from the video frame

% converted to the HSV color space.

[hueChannel,~,~] = rgb2hsv(videoFrame);

% Display the Hue Channel data

% and draw the bounding box around the face.

figure, imshow(hueChannel), title('Hue channel data');

rectangle('Position',bbox(1,:),'LineWidth',2,'EdgeColor',[1 1 0]);

% Detect the nose within the face region.

% The nose provides a more accurate

% measure of the skin tone

% because it does not contain any background pixels.

noseDetector = vision.CascadeObjectDetector('Nose');

faceImage = imcrop(videoFrame,bbox(1,:));

noseBBox = step(noseDetector,faceImage);

% The nose bounding box is defined relative to the cropped face image.

% Adjust the nose bounding box so that it is relative to the original video

% frame.

noseBBox(1,1:2) = noseBBox(1,1:2) + bbox(1,1:2);

% Create a tracker object.

tracker = vision.HistogramBasedTracker;

% Initialize the tracker histogram

% using the Hue channel pixels from the nose.

initializeObject(tracker, hueChannel, noseBBox(1,:));

% Create a video player object for displaying video frames.

videoInfo = info(videoFileReader);

videoPlayer = vision.VideoPlayer('Position',[300 300 videoInfo.VideoSize+30]);

% Track the face over successive video frames

% until the video is finished.

while ~isDone(videoFileReader)

% Extract the next video frame

videoFrame = step(videoFileReader);

% RGB -> HSV

[hueChannel,~,~] = rgb2hsv(videoFrame);

% Track using the Hue channel data

bbox = step(tracker, hueChannel);

% Insert a bounding box around the object being tracked

videoOut = insertObjectAnnotation(videoFrame,'rectangle',bbox,'Face');

% Display the annotated video frame

% using the video player object

step(videoPlayer, videoOut);

end

% Release resources

release(videoFileReader);

release(videoPlayer);

However, when I ran this, I got the following error:

Index exceeds matrix dimensions.

Error in test (line 34)

noseBBox(1,1:2) = noseBBox(1,1:2) + bbox(1,1:2);

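The failure was caused by an assumption we made when designing the code: that the nose sits at the center of the face and will always be found there. Unlike the initial face detection, which searches the whole frame, the nose detection is just a zoom-in within the cropped face. When no nose is found in that crop, noseBBox comes back empty, and indexing noseBBox(1,1:2) raises the error.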
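"Index exceeds matrix dimensions" on that line is what Matlab reports when noseBBox has no rows. A minimal sketch of a guard is shown below, using made-up values in place of real detector output. The fallback to a central patch of the face box is my own assumption, not something the Matlab example does. The names initBBox and the sample bbox are hypothetical.

```matlab
% Hypothetical face box [x y width height] and an empty nose detection.
bbox     = [120 80 100 100];
noseBBox = zeros(0, 4);              % stand-in for "no nose found"

if isempty(noseBBox)
    % Assumed fallback: use the central half of the face box as the
    % skin sample, since it is unlikely to contain background pixels.
    initBBox = [bbox(1,1) + round(bbox(1,3)/4), ...
                bbox(1,2) + round(bbox(1,4)/4), ...
                round(bbox(1,3)/2), ...
                round(bbox(1,4)/2)];
else
    % Same adjustment as in the script: make the nose box relative
    % to the full video frame instead of the cropped face image.
    noseBBox(1,1:2) = noseBBox(1,1:2) + bbox(1,1:2);
    initBBox = noseBBox(1,:);
end
fprintf('Box used to initialize the tracker: [%d %d %d %d]\n', initBBox);
```

In the script, initBBox would then be passed to initializeObject(tracker, hueChannel, initBBox) in place of noseBBox(1,:).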

I cut the first second off the beginning of the input video (verona.wmv) and saved the result as a new file: verona2.wmv

Here are the outputs with the new input video:

And the tracking video. This one was successful, at least for a couple of seconds. After that it lost track and stayed that way to the end. This was fully expected because the scene changes drastically after the initial seconds.

Oddly, there is a moment in the video where our tracker takes the whole body of a woman for a face. This is probably because we used skin tone as the feature to track.

Lots of things to learn to make a smart detector!

