Hadoop Tutorial III : MapReduce Word Count 2

Continuing from Apache Hadoop Tutorial II with CDH - MapReduce Word Count, this tutorial shows how to run a MapReduce job with Hadoop on a CDH 5 cluster on EC2.

We have four EC2 instances: one for the NameNode and three for the DataNodes.

To see how MapReduce works, we'll use a WordCount example in this tutorial. WordCount is a simple application that counts the number of occurrences of each word in an input set (code source: Word Count 2):

    package org.myorg;

    import java.io.*;
    import java.util.*;

    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.filecache.DistributedCache;
    import org.apache.hadoop.conf.*;
    import org.apache.hadoop.io.*;
    import org.apache.hadoop.mapred.*;
    import org.apache.hadoop.util.*;

    public class WordCount extends Configured implements Tool {

        public static class Map extends MapReduceBase implements Mapper<LongWritable, Text, Text, IntWritable> {

            static enum Counters { INPUT_WORDS }

            private final static IntWritable one = new IntWritable(1);
            private Text word = new Text();

            private boolean caseSensitive = true;
            private Set<String> patternsToSkip = new HashSet<String>();

            private long numRecords = 0;
            private String inputFile;

            public void configure(JobConf job) {
                caseSensitive = job.getBoolean("wordcount.case.sensitive", true);
                inputFile = job.get("map.input.file");

                if (job.getBoolean("wordcount.skip.patterns", false)) {
                    Path[] patternsFiles = new Path[0];
                    try {
                        patternsFiles = DistributedCache.getLocalCacheFiles(job);
                    } catch (IOException ioe) {
                        System.err.println("Caught exception while getting cached files: " + StringUtils.stringifyException(ioe));
                    }
                    for (Path patternsFile : patternsFiles) {
                        parseSkipFile(patternsFile);
                    }
                }
            }

            private void parseSkipFile(Path patternsFile) {
                try {
                    BufferedReader fis = new BufferedReader(new FileReader(patternsFile.toString()));
                    String pattern = null;
                    while ((pattern = fis.readLine()) != null) {
                        patternsToSkip.add(pattern);
                    }
                } catch (IOException ioe) {
                    System.err.println("Caught exception while parsing the cached file '" + patternsFile + "' : " + StringUtils.stringifyException(ioe));
                }
            }

            public void map(LongWritable key, Text value, OutputCollector<Text, IntWritable> output, Reporter reporter) throws IOException {
                String line = (caseSensitive) ? value.toString() : value.toString().toLowerCase();

                for (String pattern : patternsToSkip) {
                    line = line.replaceAll(pattern, "");
                }

                StringTokenizer tokenizer = new StringTokenizer(line);
                while (tokenizer.hasMoreTokens()) {
                    word.set(tokenizer.nextToken());
                    output.collect(word, one);
                    reporter.incrCounter(Counters.INPUT_WORDS, 1);
                }

                if ((++numRecords % 100) == 0) {
                    reporter.setStatus("Finished processing " + numRecords + " records " + "from the input file: " + inputFile);
                }
            }
        }

        public static class Reduce extends MapReduceBase implements Reducer<Text, IntWritable, Text, IntWritable> {
            public void reduce(Text key, Iterator<IntWritable> values, OutputCollector<Text, IntWritable> output, Reporter reporter) throws IOException {
                int sum = 0;
                while (values.hasNext()) {
                    sum += values.next().get();
                }
                output.collect(key, new IntWritable(sum));
            }
        }

        public int run(String[] args) throws Exception {
            JobConf conf = new JobConf(getConf(), WordCount.class);
            conf.setJobName("wordcount");

            conf.setOutputKeyClass(Text.class);
            conf.setOutputValueClass(IntWritable.class);

            conf.setMapperClass(Map.class);
            conf.setCombinerClass(Reduce.class);
            conf.setReducerClass(Reduce.class);

            conf.setInputFormat(TextInputFormat.class);
            conf.setOutputFormat(TextOutputFormat.class);

            List<String> other_args = new ArrayList<String>();
            for (int i=0; i < args.length; ++i) {
                if ("-skip".equals(args[i])) {
                    DistributedCache.addCacheFile(new Path(args[++i]).toUri(), conf);
                    conf.setBoolean("wordcount.skip.patterns", true);
                } else {
                    other_args.add(args[i]);
                }
            }

            FileInputFormat.setInputPaths(conf, new Path(other_args.get(0)));
            FileOutputFormat.setOutputPath(conf, new Path(other_args.get(1)));

            JobClient.runJob(conf);
            return 0;
        }

        public static void main(String[] args) throws Exception {
            int res = ToolRunner.run(new Configuration(), new WordCount(), args);
            System.exit(res);
        }
    }
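The per-line logic of `Map.map()` in the listing above can be tried without a cluster. The following is a minimal sketch in plain Java that assumes nothing beyond the JDK; `MapLineSketch` and `tokens` are hypothetical names introduced here for illustration and are not part of the tutorial's code. It mirrors the three steps of the mapper: optional lower-casing, removal of each skip pattern with `replaceAll`, and whitespace tokenization.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.StringTokenizer;

// Hadoop-free sketch of the per-line work done in WordCount.Map.map().
// Class and method names are illustrative assumptions, not tutorial code.
final class MapLineSketch {

    // Returns the words the mapper would emit (each with a count of 1).
    static List<String> tokens(String line, boolean caseSensitive, List<String> patternsToSkip) {
        // Step 1: optionally fold the whole line to lower case.
        String text = caseSensitive ? line : line.toLowerCase();

        // Step 2: remove every skip pattern. replaceAll treats each pattern
        // as a regular expression, so a literal dot must be written as "\\.".
        for (String pattern : patternsToSkip) {
            text = text.replaceAll(pattern, "");
        }

        // Step 3: split on whitespace, as StringTokenizer does by default.
        List<String> out = new ArrayList<>();
        StringTokenizer tokenizer = new StringTokenizer(text);
        while (tokenizer.hasMoreTokens()) {
            out.add(tokenizer.nextToken());
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical skip patterns that strip some punctuation.
        List<String> skip = Arrays.asList("\\.", ",", "!");
        System.out.println(tokens("Hello World, Bye World!", true, skip));
        System.out.println(tokens("Hello World, Bye World!", false, skip));
    }
}
```

Because the line is lower-cased before the patterns are applied, skip patterns that are meant to match words should be written in lower case when `wordcount.case.sensitive` is `false`.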

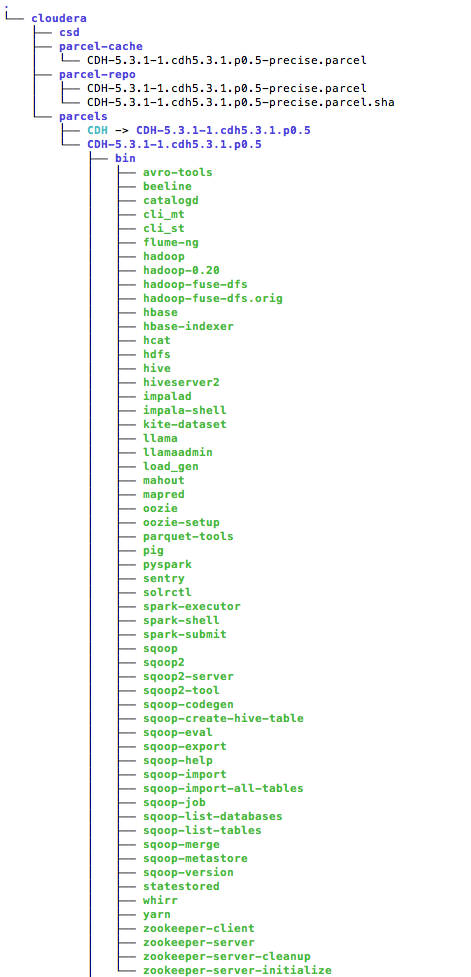

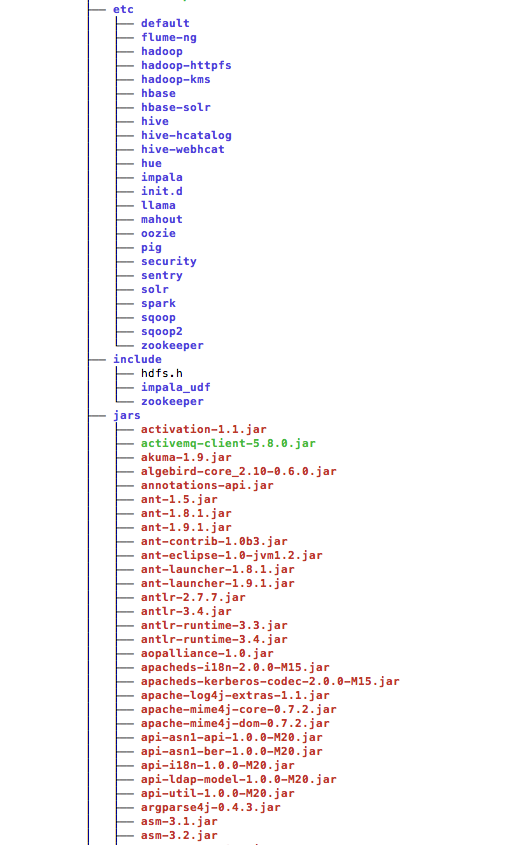

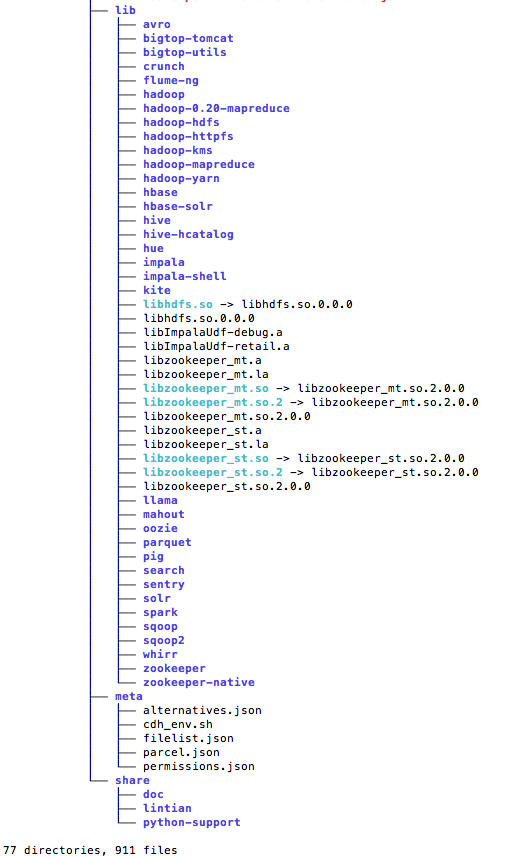

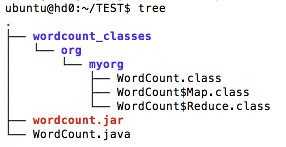

Here is the Hadoop directory structure that Cloudera Manager constructed:

...

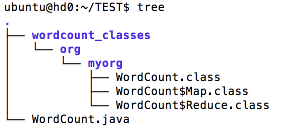

    ubuntu@hd0:~/TEST$ mkdir wordcount_classes

Compile:

    ubuntu@hd0:~/TEST$ javac -cp /opt/cloudera/parcels/CDH/lib/hadoop/*:/opt/cloudera/parcels/CDH/lib/hadoop/client-0.20/* -d wordcount_classes WordCount.java

Create a JAR:

    ubuntu@hd0:~/TEST$ jar -cvf wordcount.jar -C wordcount_classes/ .
    added manifest
    adding: org/(in = 0) (out= 0)(stored 0%)
    adding: org/myorg/(in = 0) (out= 0)(stored 0%)
    adding: org/myorg/WordCount$Reduce.class(in = 1611) (out= 650)(deflated 59%)
    adding: org/myorg/WordCount.class(in = 1546) (out= 748)(deflated 51%)
    adding: org/myorg/WordCount$Map.class(in = 1938) (out= 798)(deflated 58%)

Create the input directory /user/cloudera/wordcount/input in HDFS:

    hdfs@hd0:/home/ubuntu/TEST$ sudo su hdfs
    hdfs@hd0:/home/ubuntu/TEST$ hadoop fs -mkdir /user/cloudera
    hdfs@hd0:/home/ubuntu/TEST$ hadoop fs -chown hdfs /user/cloudera
    hdfs@hd0:/home/ubuntu/TEST$ hadoop fs -ls /user
    Found 4 items
    drwxr-xr-x - hdfs supergroup 0 2015-02-15 00:19 /user/cloudera
    drwxrwxrwx - mapred hadoop 0 2015-02-14 22:28 /user/history
    drwxrwxr-t - hive hive 0 2015-02-14 21:31 /user/hive
    drwxrwxr-x - oozie oozie 0 2015-02-14 21:40 /user/oozie
    hdfs@hd0:/home/ubuntu/TEST$ hadoop fs -mkdir /user/cloudera/wordcount /user/cloudera/wordcount/input
    hdfs@hd0:/home/ubuntu/TEST$ hdfs dfs -ls -R /user/cloudera
    drwxr-xr-x - hdfs supergroup 0 2015-02-15 00:31 /user/cloudera/wordcount
    drwxr-xr-x - hdfs supergroup 0 2015-02-15 00:31 /user/cloudera/wordcount/input

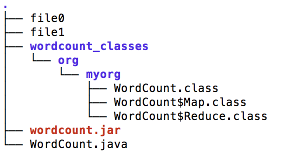

Create sample text files as input and copy them to the input directory in HDFS:

    ubuntu@hd0:~/TEST$ whoami
    ubuntu
    ubuntu@hd0:~/TEST$ echo "Hello World Bye World" > file0
    ubuntu@hd0:~/TEST$ echo "Hello Hadoop Goodbye Hadoop" > file1
    ubuntu@hd0:~/TEST$ sudo su hdfs
    hdfs@hd0:/home/ubuntu/TEST$ hadoop fs -put file* /user/cloudera/wordcount/input
    hdfs@hd0:/home/ubuntu/TEST$ hdfs dfs -cat /user/cloudera/wordcount/input/file0
    Hello World Bye World
    hdfs@hd0:/home/ubuntu/TEST$ hdfs dfs -cat /user/cloudera/wordcount/input/file1
    Hello Hadoop Goodbye Hadoop

Run the application:

    hdfs@hd0:/home/ubuntu/TEST$ ls -la
    total 20
    drwxrwxr-x 3 ubuntu ubuntu 4096 Feb 10 17:27 .
    drwxr-xr-x 5 ubuntu ubuntu 4096 Feb 10 15:00 ..
    drwxrwxr-x 2 ubuntu ubuntu 4096 Feb 10 15:00 wordcount_classes
    -rw-rw-r-- 1 ubuntu ubuntu 3071 Feb 10 15:08 wordcount.jar
    -rw-rw-r-- 1 ubuntu ubuntu 2089 Feb 10 15:00 WordCount.java
    hdfs@hd0:/home/ubuntu/TEST$ whoami
    ubuntu
    hdfs@hd0:/home/ubuntu/TEST$ sudo su hdfs
    hdfs@hd0:/home/ubuntu/TEST$ hadoop jar wordcount.jar org.myorg.WordCount /user/cloudera/wordcount/input /user/cloudera/wordcount/output
    15/02/15 03:37:09 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id
    15/02/15 03:37:09 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=
    15/02/15 03:37:10 INFO jvm.JvmMetrics: Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized
    15/02/15 03:37:10 WARN mapreduce.JobSubmitter: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.
    15/02/15 03:37:11 INFO mapred.FileInputFormat: Total input paths to process : 2
    15/02/15 03:37:11 INFO mapreduce.JobSubmitter: number of splits:2
    15/02/15 03:37:11 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local1462379557_0001
    15/02/15 03:37:12 WARN conf.Configuration: file:/tmp/hadoop-hdfs/mapred/staging/hdfs1462379557/.staging/job_local1462379557_0001/job.xml:an attempt to override final parameter: hadoop.ssl.require.client.cert; Ignoring.
    15/02/15 03:37:12 WARN conf.Configuration: file:/tmp/hadoop-hdfs/mapred/staging/hdfs1462379557/.staging/job_local1462379557_0001/job.xml:an attempt to override final parameter: mapreduce.job.end-notification.max.retry.interval; Ignoring.
    15/02/15 03:37:12 WARN conf.Configuration: file:/tmp/hadoop-hdfs/mapred/staging/hdfs1462379557/.staging/job_local1462379557_0001/job.xml:an attempt to override final parameter: hadoop.ssl.client.conf; Ignoring.
    15/02/15 03:37:12 WARN conf.Configuration: file:/tmp/hadoop-hdfs/mapred/staging/hdfs1462379557/.staging/job_local1462379557_0001/job.xml:an attempt to override final parameter: hadoop.ssl.keystores.factory.class; Ignoring.
    15/02/15 03:37:12 WARN conf.Configuration: file:/tmp/hadoop-hdfs/mapred/staging/hdfs1462379557/.staging/job_local1462379557_0001/job.xml:an attempt to override final parameter: hadoop.ssl.server.conf; Ignoring.
    15/02/15 03:37:12 WARN conf.Configuration: file:/tmp/hadoop-hdfs/mapred/staging/hdfs1462379557/.staging/job_local1462379557_0001/job.xml:an attempt to override final parameter: mapreduce.job.end-notification.max.attempts; Ignoring.
    15/02/15 03:37:12 WARN conf.Configuration: file:/tmp/hadoop-hdfs/mapred/local/localRunner/hdfs/job_local1462379557_0001/job_local1462379557_0001.xml:an attempt to override final parameter: hadoop.ssl.require.client.cert; Ignoring.
    15/02/15 03:37:12 WARN conf.Configuration: file:/tmp/hadoop-hdfs/mapred/local/localRunner/hdfs/job_local1462379557_0001/job_local1462379557_0001.xml:an attempt to override final parameter: mapreduce.job.end-notification.max.retry.interval; Ignoring.
    15/02/15 03:37:12 WARN conf.Configuration: file:/tmp/hadoop-hdfs/mapred/local/localRunner/hdfs/job_local1462379557_0001/job_local1462379557_0001.xml:an attempt to override final parameter: hadoop.ssl.client.conf; Ignoring.
    15/02/15 03:37:12 WARN conf.Configuration: file:/tmp/hadoop-hdfs/mapred/local/localRunner/hdfs/job_local1462379557_0001/job_local1462379557_0001.xml:an attempt to override final parameter: hadoop.ssl.keystores.factory.class; Ignoring.
    15/02/15 03:37:12 WARN conf.Configuration: file:/tmp/hadoop-hdfs/mapred/local/localRunner/hdfs/job_local1462379557_0001/job_local1462379557_0001.xml:an attempt to override final parameter: hadoop.ssl.server.conf; Ignoring.
    15/02/15 03:37:12 WARN conf.Configuration: file:/tmp/hadoop-hdfs/mapred/local/localRunner/hdfs/job_local1462379557_0001/job_local1462379557_0001.xml:an attempt to override final parameter: mapreduce.job.end-notification.max.attempts; Ignoring.
    15/02/15 03:37:12 INFO mapreduce.Job: The url to track the job: http://localhost:8080/
    15/02/15 03:37:12 INFO mapreduce.Job: Running job: job_local1462379557_0001
    15/02/15 03:37:12 INFO mapred.LocalJobRunner: OutputCommitter set in config null
    15/02/15 03:37:12 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapred.FileOutputCommitter
    15/02/15 03:37:12 INFO mapred.LocalJobRunner: Waiting for map tasks
    15/02/15 03:37:12 INFO mapred.LocalJobRunner: Starting task: attempt_local1462379557_0001_m_000000_0
    15/02/15 03:37:12 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]
    15/02/15 03:37:12 INFO mapred.MapTask: Processing split: hdfs://hd0:8020/user/cloudera/wordcount/input/file1:0+28
    15/02/15 03:37:13 INFO mapred.MapTask: numReduceTasks: 1
    15/02/15 03:37:13 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)
    15/02/15 03:37:13 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100
    15/02/15 03:37:13 INFO mapred.MapTask: soft limit at 83886080
    15/02/15 03:37:13 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600
    15/02/15 03:37:13 INFO mapred.MapTask: kvstart = 26214396; length = 6553600
    15/02/15 03:37:13 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer
    15/02/15 03:37:13 INFO mapred.LocalJobRunner:
    15/02/15 03:37:13 INFO mapred.MapTask: Starting flush of map output
    15/02/15 03:37:13 INFO mapred.MapTask: Spilling map output
    15/02/15 03:37:13 INFO mapred.MapTask: bufstart = 0; bufend = 44; bufvoid = 104857600
    15/02/15 03:37:13 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26214384(104857536); length = 13/6553600
    15/02/15 03:37:13 INFO mapred.MapTask: Finished spill 0
    15/02/15 03:37:13 INFO mapred.Task: Task:attempt_local1462379557_0001_m_000000_0 is done. And is in the process of committing
    15/02/15 03:37:13 INFO mapred.LocalJobRunner: hdfs://hd0:8020/user/cloudera/wordcount/input/file1:0+28
    15/02/15 03:37:13 INFO mapred.Task: Task 'attempt_local1462379557_0001_m_000000_0' done.
    15/02/15 03:37:13 INFO mapred.LocalJobRunner: Finishing task: attempt_local1462379557_0001_m_000000_0
    15/02/15 03:37:13 INFO mapred.LocalJobRunner: Starting task: attempt_local1462379557_0001_m_000001_0
    15/02/15 03:37:13 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]
    15/02/15 03:37:13 INFO mapred.MapTask: Processing split: hdfs://hd0:8020/user/cloudera/wordcount/input/file0:0+22
    15/02/15 03:37:13 INFO mapred.MapTask: numReduceTasks: 1
    15/02/15 03:37:13 INFO mapreduce.Job: Job job_local1462379557_0001 running in uber mode : false
    15/02/15 03:37:13 INFO mapreduce.Job: map 100% reduce 0%
    15/02/15 03:37:13 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)
    15/02/15 03:37:13 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100
    15/02/15 03:37:13 INFO mapred.MapTask: soft limit at 83886080
    15/02/15 03:37:13 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600
    15/02/15 03:37:13 INFO mapred.MapTask: kvstart = 26214396; length = 6553600
    15/02/15 03:37:13 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer
    15/02/15 03:37:13 INFO mapred.LocalJobRunner:
    15/02/15 03:37:13 INFO mapred.MapTask: Starting flush of map output
    15/02/15 03:37:13 INFO mapred.MapTask: Spilling map output
    15/02/15 03:37:13 INFO mapred.MapTask: bufstart = 0; bufend = 38; bufvoid = 104857600
    15/02/15 03:37:13 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26214384(104857536); length = 13/6553600
    15/02/15 03:37:13 INFO mapred.MapTask: Finished spill 0
    15/02/15 03:37:13 INFO mapred.Task: Task:attempt_local1462379557_0001_m_000001_0 is done. And is in the process of committing
    15/02/15 03:37:13 INFO mapred.LocalJobRunner: hdfs://hd0:8020/user/cloudera/wordcount/input/file0:0+22
    15/02/15 03:37:13 INFO mapred.Task: Task 'attempt_local1462379557_0001_m_000001_0' done.
    15/02/15 03:37:13 INFO mapred.LocalJobRunner: Finishing task: attempt_local1462379557_0001_m_000001_0
    15/02/15 03:37:13 INFO mapred.LocalJobRunner: map task executor complete.
    15/02/15 03:37:13 INFO mapred.LocalJobRunner: Waiting for reduce tasks
    15/02/15 03:37:13 INFO mapred.LocalJobRunner: Starting task: attempt_local1462379557_0001_r_000000_0
    15/02/15 03:37:13 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]
    15/02/15 03:37:13 INFO mapred.ReduceTask: Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@2aa9233d
    15/02/15 03:37:14 INFO reduce.MergeManagerImpl: MergerManager: memoryLimit=181665792, maxSingleShuffleLimit=45416448, mergeThreshold=119899424, ioSortFactor=10, memToMemMergeOutputsThreshold=10
    15/02/15 03:37:14 INFO reduce.EventFetcher: attempt_local1462379557_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events
    15/02/15 03:37:14 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local1462379557_0001_m_000000_0 decomp: 41 len: 45 to MEMORY
    15/02/15 03:37:14 INFO reduce.InMemoryMapOutput: Read 41 bytes from map-output for attempt_local1462379557_0001_m_000000_0
    15/02/15 03:37:14 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 41, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->41
    15/02/15 03:37:14 WARN io.ReadaheadPool: Failed readahead on ifile
    EBADF: Bad file descriptor
        at org.apache.hadoop.io.nativeio.NativeIO$POSIX.posix_fadvise(Native Method)
        at org.apache.hadoop.io.nativeio.NativeIO$POSIX.posixFadviseIfPossible(NativeIO.java:265)
        at org.apache.hadoop.io.nativeio.NativeIO$POSIX$CacheManipulator.posixFadviseIfPossible(NativeIO.java:144)
        at org.apache.hadoop.io.ReadaheadPool$ReadaheadRequestImpl.run(ReadaheadPool.java:206)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
        at java.lang.Thread.run(Thread.java:745)
    15/02/15 03:37:14 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local1462379557_0001_m_000001_0 decomp: 36 len: 40 to MEMORY
    15/02/15 03:37:14 INFO reduce.InMemoryMapOutput: Read 36 bytes from map-output for attempt_local1462379557_0001_m_000001_0
    15/02/15 03:37:14 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 36, inMemoryMapOutputs.size() -> 2, commitMemory -> 41, usedMemory ->77
    15/02/15 03:37:14 WARN io.ReadaheadPool: Failed readahead on ifile
    EBADF: Bad file descriptor
        at org.apache.hadoop.io.nativeio.NativeIO$POSIX.posix_fadvise(Native Method)
        at org.apache.hadoop.io.nativeio.NativeIO$POSIX.posixFadviseIfPossible(NativeIO.java:265)
        at org.apache.hadoop.io.nativeio.NativeIO$POSIX$CacheManipulator.posixFadviseIfPossible(NativeIO.java:144)
        at org.apache.hadoop.io.ReadaheadPool$ReadaheadRequestImpl.run(ReadaheadPool.java:206)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
        at java.lang.Thread.run(Thread.java:745)
    15/02/15 03:37:14 INFO reduce.EventFetcher: EventFetcher is interrupted.. Returning
    15/02/15 03:37:14 INFO mapred.LocalJobRunner: 2 / 2 copied.
    15/02/15 03:37:14 INFO reduce.MergeManagerImpl: finalMerge called with 2 in-memory map-outputs and 0 on-disk map-outputs
    15/02/15 03:37:14 INFO mapred.Merger: Merging 2 sorted segments
    15/02/15 03:37:14 INFO mapred.Merger: Down to the last merge-pass, with 2 segments left of total size: 61 bytes
    15/02/15 03:37:14 INFO reduce.MergeManagerImpl: Merged 2 segments, 77 bytes to disk to satisfy reduce memory limit
    15/02/15 03:37:14 INFO reduce.MergeManagerImpl: Merging 1 files, 79 bytes from disk
    15/02/15 03:37:14 INFO reduce.MergeManagerImpl: Merging 0 segments, 0 bytes from memory into reduce
    15/02/15 03:37:14 INFO mapred.Merger: Merging 1 sorted segments
    15/02/15 03:37:14 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 69 bytes
    15/02/15 03:37:14 INFO mapred.LocalJobRunner: 2 / 2 copied.
    15/02/15 03:37:14 INFO mapred.Task: Task:attempt_local1462379557_0001_r_000000_0 is done. And is in the process of committing
    15/02/15 03:37:14 INFO mapred.LocalJobRunner: 2 / 2 copied.
    15/02/15 03:37:14 INFO mapred.Task: Task attempt_local1462379557_0001_r_000000_0 is allowed to commit now
    15/02/15 03:37:14 INFO output.FileOutputCommitter: Saved output of task 'attempt_local1462379557_0001_r_000000_0' to hdfs://hd0:8020/user/cloudera/wordcount/output/_temporary/0/task_local1462379557_0001_r_000000
    15/02/15 03:37:14 INFO mapred.LocalJobRunner: reduce > reduce
    15/02/15 03:37:14 INFO mapred.Task: Task 'attempt_local1462379557_0001_r_000000_0' done.
    15/02/15 03:37:14 INFO mapred.LocalJobRunner: Finishing task: attempt_local1462379557_0001_r_000000_0
    15/02/15 03:37:14 INFO mapred.LocalJobRunner: reduce task executor complete.
    15/02/15 03:37:15 INFO mapreduce.Job: map 100% reduce 100%
    15/02/15 03:37:15 INFO mapreduce.Job: Job job_local1462379557_0001 completed successfully
    15/02/15 03:37:15 INFO mapreduce.Job: Counters: 38
        File System Counters
            FILE: Number of bytes read=11004
            FILE: Number of bytes written=768556
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=128
            HDFS: Number of bytes written=41
            HDFS: Number of read operations=25
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=5
        Map-Reduce Framework
            Map input records=2
            Map output records=8
            Map output bytes=82
            Map output materialized bytes=85
            Input split bytes=206
            Combine input records=8
            Combine output records=6
            Reduce input groups=5
            Reduce shuffle bytes=85
            Reduce input records=6
            Reduce output records=5
            Spilled Records=12
            Shuffled Maps =2
            Failed Shuffles=0
            Merged Map outputs=2
            GC time elapsed (ms)=196
            CPU time spent (ms)=0
            Physical memory (bytes) snapshot=0
            Virtual memory (bytes) snapshot=0
            Total committed heap usage (bytes)=778567680
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=50
        File Output Format Counters
            Bytes Written=41
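The run above passes only the input and output paths. The `run` method in the WordCount listing also recognizes a `-skip` option followed by a file path, which this transcript does not exercise. As a rough, Hadoop-free sketch of that argument handling (the `SkipArgsSketch` class, the `Parsed` holder, the bounds check, and the `patterns.txt` path are all assumptions added for illustration and are not part of the original code):

```java
import java.util.ArrayList;
import java.util.List;

// Sketch of how run() separates "-skip <file>" pairs from positional arguments.
// Everything here is illustrative; the real job registers each skip file with
// DistributedCache instead of collecting it in a list.
final class SkipArgsSketch {

    // Hypothetical holder for the parsed result.
    static final class Parsed {
        final List<String> skipFiles = new ArrayList<>();
        final List<String> otherArgs = new ArrayList<>();
    }

    static Parsed parse(String[] args) {
        Parsed parsed = new Parsed();
        for (int i = 0; i < args.length; ++i) {
            if ("-skip".equals(args[i])) {
                // The original code reads args[++i] without a check;
                // this sketch adds one to fail with a clearer message.
                if (i + 1 >= args.length) {
                    throw new IllegalArgumentException("-skip requires a file path");
                }
                parsed.skipFiles.add(args[++i]);
            } else {
                // Positional arguments: index 0 is the input path, index 1 the output path.
                parsed.otherArgs.add(args[i]);
            }
        }
        return parsed;
    }

    public static void main(String[] args) {
        Parsed parsed = parse(new String[] {
            "-skip", "/user/cloudera/wordcount/patterns.txt", // hypothetical pattern file
            "/user/cloudera/wordcount/input",
            "/user/cloudera/wordcount/output"
        });
        System.out.println("skip files: " + parsed.skipFiles);
        System.out.println("input:      " + parsed.otherArgs.get(0));
        System.out.println("output:     " + parsed.otherArgs.get(1));
    }
}
```

Since the option is matched by position in the loop, `-skip` may appear before, between, or after the input and output paths.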

Examine the output:

    hdfs@hd0:/home/ubuntu/TEST$ hdfs dfs -ls -R /user/cloudera/wordcount
    drwxr-xr-x - hdfs supergroup 0 2015-02-15 00:46 /user/cloudera/wordcount/input
    -rw-r--r-- 3 hdfs supergroup 22 2015-02-15 00:46 /user/cloudera/wordcount/input/file0
    -rw-r--r-- 3 hdfs supergroup 28 2015-02-15 00:46 /user/cloudera/wordcount/input/file1
    drwxr-xr-x - hdfs supergroup 0 2015-02-15 03:37 /user/cloudera/wordcount/output
    -rw-r--r-- 3 hdfs supergroup 0 2015-02-15 03:37 /user/cloudera/wordcount/output/_SUCCESS
    -rw-r--r-- 3 hdfs supergroup 41 2015-02-15 03:37 /user/cloudera/wordcount/output/part-00000
    hdfs@hd0:/home/ubuntu/TEST$ hdfs dfs -cat /user/cloudera/wordcount/output/part-00000
    Bye 1
    Goodbye 1
    Hadoop 2
    Hello 2
    World 2
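The record counters in the job log (8 map output records, 6 combine output records, 5 reduce output records) follow from the two input files. The sketch below replays the job in plain Java under a simplifying assumption: each input file is one map task and the combiner runs exactly once per map task. Hadoop does not guarantee how many times a combiner is invoked, so this is only a model that happens to agree with the logged run. `CombinerSketch` and its method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hadoop-free model of map -> combine -> reduce for the two sample files.
// Names are illustrative assumptions, not tutorial code.
final class CombinerSketch {

    // Map phase for one split: emit every token (each stands for the pair (word, 1)).
    static List<String> mapSplit(String contents) {
        List<String> emitted = new ArrayList<>();
        StringTokenizer tokenizer = new StringTokenizer(contents);
        while (tokenizer.hasMoreTokens()) {
            emitted.add(tokenizer.nextToken());
        }
        return emitted;
    }

    // Combine phase: sum the counts locally within one map task's output.
    static Map<String, Integer> combine(List<String> emitted) {
        Map<String, Integer> partial = new TreeMap<>();
        for (String word : emitted) {
            partial.merge(word, 1, Integer::sum);
        }
        return partial;
    }

    // Reduce phase: sum the partial counts from all map tasks, sorted by key.
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, Integer> totals = new TreeMap<>();
        for (Map<String, Integer> partial : partials) {
            for (Map.Entry<String, Integer> entry : partial.entrySet()) {
                totals.merge(entry.getKey(), entry.getValue(), Integer::sum);
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        // Contents of file0 and file1 from the tutorial.
        List<String> splits = Arrays.asList("Hello World Bye World", "Hello Hadoop Goodbye Hadoop");

        int mapOutputRecords = 0;
        int combineOutputRecords = 0;
        List<Map<String, Integer>> partials = new ArrayList<>();
        for (String split : splits) {
            List<String> emitted = mapSplit(split);
            Map<String, Integer> partial = combine(emitted);
            mapOutputRecords += emitted.size();
            combineOutputRecords += partial.size();
            partials.add(partial);
        }
        Map<String, Integer> totals = reduce(partials);

        System.out.println("Map output records=" + mapOutputRecords);         // 8
        System.out.println("Combine output records=" + combineOutputRecords); // 6
        System.out.println("Reduce output records=" + totals.size());         // 5
        System.out.println(totals); // {Bye=1, Goodbye=1, Hadoop=2, Hello=2, World=2}
    }
}
```

Each file yields four (word, 1) pairs, and the combiner collapses the repeated word in each file ("World" in file0, "Hadoop" in file1), which is why eight records shrink to six before the shuffle. Only "Hello" occurs in both files, so the reducer merges six records into five output lines.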

