Terraform Tutorial - Modules

- Module:

A Terraform module is a set of Terraform configuration files in a single directory. Even a simple configuration consisting of a single directory with one or more .tf files is a module. So in this sense, every Terraform configuration is part of a module.

- Root module:

When we run Terraform commands directly from a directory, it is considered the root module.

- Child module:

A module that is called by another configuration is referred to as a child module.

- Calling module:

Terraform commands will only directly use the configuration files in one directory, which is usually the current working directory. However, our configuration can use module blocks to call modules in other directories. When Terraform encounters a module block, it loads and processes that module's configuration files.

- source argument:

When calling a module, the source argument is required. Terraform may search for a module in the Terraform registry that matches the given string. We could also use a URL or local file path for the source of our modules.

- passing variables to a module:

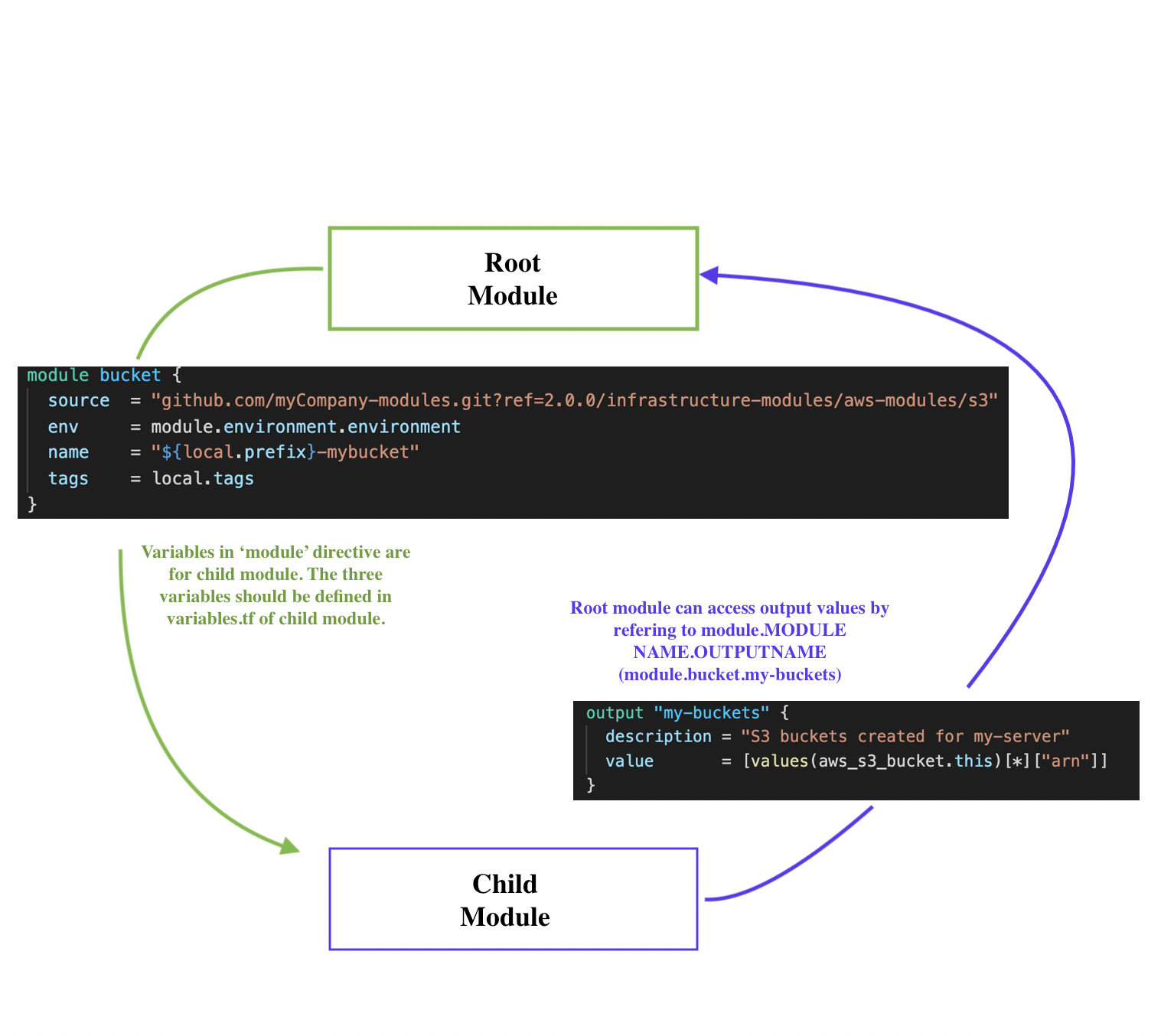

When calling a module, we may want to pass variables to the module. This is usually done in the module block.

- terraform init:

When using a new module for the first time, we must run "terraform init" to install the module. When the command is run, Terraform will install any new modules in the .terraform/modules directory within our configuration's working directory. For local modules, Terraform will create a symlink to the module's directory.

    $ terraform init
    Initializing modules...
    Initializing the backend...
    Initializing provider plugins...

Then, our .terraform/modules directory will look something like this:

    .terraform/modules
    ├── ec2_instances
    ├── modules.json
    └── vpc

where our root main.tf looks like this:

    ...
    module "vpc" {
      source = "terraform-aws-modules/vpc/aws"
      ...
    }

    module "ec2_instances" {
      source = "terraform-aws-modules/ec2-instance/aws"
      ...
    }

- terraform.tfstate/terraform.tfstate.backup:

These files contain our Terraform state, and are how Terraform keeps track of the relationship between our configuration and the infrastructure provisioned by it.

- .terraform:

This directory contains the modules and plugins used to provision our infrastructure.

- provider block:

We don't need a provider block in module configuration. When Terraform processes a module block, the module inherits the provider from the enclosing configuration. Because of this, including provider blocks in modules is not recommended.

- module outputs:

Which values should we add as outputs? Outputs are the only supported way for users to get information about resources configured by the module, so we add an output for each value that callers will need, in the outputs.tf file inside the module directory.

A module's outputs can be accessed as read-only attributes on the module object, which is available within the configuration that calls the module. We can reference these outputs in expressions as module.<MODULE NAME>.<OUTPUT NAME>.
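As a minimal sketch of the terms above, the following root configuration calls one module from a local path and one from the Terraform Registry, passes input variables, and reads an output. The "network" module, its ./modules/network path, and its cidr_block input are hypothetical placeholders and not part of this post's code.

```hcl
# Local path: starts with ./ or ../ so Terraform does not treat it as a registry address.
module "network" {
  source = "./modules/network" # hypothetical local module directory

  cidr_block = "10.0.0.0/16" # hypothetical input variable declared by that module
}

# Registry address in the form <NAMESPACE>/<NAME>/<PROVIDER>, pinned with version.
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "2.21.0"

  name = "example-vpc"
  cidr = "10.0.0.0/16"
}

# Outputs of a child module are read as module.<MODULE NAME>.<OUTPUT NAME>.
output "vpc_id" {
  value = module.vpc.vpc_id
}
```

After adding or changing a module block like these, terraform init has to be run again so that the module is installed.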

In this post, we're going to go over how to use modules to organize Terraform-managed infrastructure.

In addition, we'll learn how a child module exposes its resources to a parent module via Terraform outputs.

We're using Terraform 0.12:

    $ terraform -v
    Terraform v0.12.28
    + provider.aws v3.34.0
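If we want the configuration to refuse to run with other versions, the versions above can be pinned in the root module. This is only a sketch of one reasonable set of constraints, not something the original code includes. The string form of required_providers is the Terraform 0.12 style, and Terraform 0.13 and later prefer an object with source and version.

```hcl
terraform {
  # Allow 0.12.26 and later patch releases, but not 0.13.
  required_version = "~> 0.12.26"

  required_providers {
    # Allow 3.34 and later 3.x releases of the AWS provider, but not 4.0.
    aws = "~> 3.34"
  }
}
```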

Up to this point, we've been configuring Terraform by editing Terraform configurations directly. As our infrastructure grows, this practice has a few key problems: a lack of organization, a lack of reusability, and difficulties in management for teams.

Modules are used to create reusable components, improve organization, and to treat pieces of infrastructure as a black box.

Ref - https://www.terraform.io/intro/getting-started/modules.html

Here are the files we'll use in this post:

    ├── main.tf
    ├── my_modules
    │   └── instance
    │       ├── main.tf
    │       ├── output.tf
    │       └── variables.tf
    ├── output.tf
    └── variables.tf

Basically, the root main.tf calls the module defined in my_modules/instance/main.tf. Each main.tf has its own variables defined in the variables.tf next to it. Here are the files:

./main.tf:

    provider "aws" {
      region = var.aws_region
    }

    module "my_instance_module" {
      source = "./my_modules/instance"

      ami           = "ami-04169656fea786776"
      instance_type = "t2.nano"
      instance_name = "myvm01"
    }

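Note that we are passing variables (ami, instance_type, instance_name) to the module as arguments in the module block, next to the source location. Each of them must be declared as an input variable in the module's variables.tf, while variables used by the root configuration itself (here, aws_region) are declared in the root variables.tf.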
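To illustrate how module arguments and variable declarations fit together, here is a small sketch. It is a variant of this post's files, not a copy: instance_name is declared without a default to show a required input, and the key pair name is a hypothetical placeholder.

```hcl
# In the module (my_modules/instance/variables.tf):
# no default, so every caller must set this argument.
variable "instance_name" {
  description = "Value of the Name tag for the EC2 instance"
  type        = string
}

# In the root (main.tf): arguments that are not meta-arguments
# (source, version, providers) are matched to module variables by name.
module "my_instance_module" {
  source = "./my_modules/instance"

  instance_name = "myvm01"      # required, because the variable has no default
  instance_type = "t2.nano"     # overrides the module's default
  key_name      = "my-key-pair" # hypothetical key pair name, overrides the default
}
```

Any module variable that is not set in the module block (ami in this sketch) falls back to the default declared in the module.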

./variables.tf:

    variable "aws_region" {
      description = "AWS region"
      type        = string
      default     = "us-east-1"
    }

./output.tf:

    output "instance_ip_addr" {
      value       = module.my_instance_module.instance_ip_addr
      description = "The public IP address of the main instance."
    }

Note that we're accessing the output value by referring to module.<MODULE NAME>.<OUTPUT NAME>.

The files in the module are the following.

./my_modules/instance/main.tf:

    resource "aws_instance" "my_instance" {
      ami           = var.ami
      instance_type = var.instance_type
      key_name      = var.key_name

      tags = {
        Name = var.instance_name
      }
    }
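Notice that the module's main.tf has no provider block: it uses the provider configured in the root module. If a module has to use a different configuration of the same provider, the caller can pass it explicitly with the providers meta-argument. The sketch below assumes a second region, an alias name, and a placeholder AMI ID, none of which are used elsewhere in this post.

```hcl
provider "aws" {
  region = "us-east-1"
}

# A second, aliased configuration of the same provider.
provider "aws" {
  alias  = "west"
  region = "us-west-2"
}

module "my_instance_west" {
  source = "./my_modules/instance"

  # Hand the aliased configuration to the module as its default "aws" provider.
  providers = {
    aws = aws.west
  }

  ami = "ami-xxxxxxxx" # placeholder: AMI IDs are region-specific
}
```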

./my_modules/instance/variables.tf:

    variable "ami" {
      type    = string
      default = "ami-04169656fea786776"
    }

    variable "instance_type" {
      type    = string
      default = "t2.nano"
    }

    variable "instance_name" {
      description = "Value of the Name tag for the EC2 instance"
      type        = string
      default     = "ExampleInstance"
    }

    variable "key_name" {
      type    = string
      default = "einsteinish"
    }

./my_modules/instance/output.tf:

    output "instance_ip_addr" {
      value       = aws_instance.my_instance.*.public_ip
      description = "The public IP address of the main instance."
    }
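Because aws_instance.my_instance is declared without count, the splat expression (.*) turns the single instance into a one-element list, which is why the apply output in this post prints the address inside brackets. If a plain string is preferred, a variant of the output (not what this post uses) could reference the attribute directly:

```hcl
output "instance_ip_addr" {
  description = "The public IP address of the main instance."

  # No splat: the value is a single string instead of a one-element list.
  value = aws_instance.my_instance.public_ip
}
```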

Now that we are ready, run the Terraform commands from the project root directory, where the top-level main.tf is located:

    $ terraform init

    $ terraform validate
    Success! The configuration is valid.

    $ terraform plan
    ...
    Plan: 1 to add, 0 to change, 0 to destroy.
    ...

$ terraform apply

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# module.my_instance_module.aws_instance.my_instance will be created

+ resource "aws_instance" "my_instance" {

+ ami = "ami-04169656fea786776"

+ arn = (known after apply)

+ associate_public_ip_address = (known after apply)

+ availability_zone = (known after apply)

+ cpu_core_count = (known after apply)

+ cpu_threads_per_core = (known after apply)

+ get_password_data = false

+ host_id = (known after apply)

+ id = (known after apply)

+ instance_state = (known after apply)

+ instance_type = "t2.nano"

+ ipv6_address_count = (known after apply)

+ ipv6_addresses = (known after apply)

+ key_name = "einsteinish"

+ outpost_arn = (known after apply)

+ password_data = (known after apply)

+ placement_group = (known after apply)

+ primary_network_interface_id = (known after apply)

+ private_dns = (known after apply)

+ private_ip = (known after apply)

+ public_dns = (known after apply)

+ public_ip = (known after apply)

+ secondary_private_ips = (known after apply)

+ security_groups = (known after apply)

+ source_dest_check = true

+ subnet_id = (known after apply)

+ tags = {

+ "Name" = "my_instance_001"

}

+ tenancy = (known after apply)

+ vpc_security_group_ids = (known after apply)

+ ebs_block_device {

+ delete_on_termination = (known after apply)

+ device_name = (known after apply)

+ encrypted = (known after apply)

+ iops = (known after apply)

+ kms_key_id = (known after apply)

+ snapshot_id = (known after apply)

+ tags = (known after apply)

+ throughput = (known after apply)

+ volume_id = (known after apply)

+ volume_size = (known after apply)

+ volume_type = (known after apply)

}

+ enclave_options {

+ enabled = (known after apply)

}

+ ephemeral_block_device {

+ device_name = (known after apply)

+ no_device = (known after apply)

+ virtual_name = (known after apply)

}

+ metadata_options {

+ http_endpoint = (known after apply)

+ http_put_response_hop_limit = (known after apply)

+ http_tokens = (known after apply)

}

+ network_interface {

+ delete_on_termination = (known after apply)

+ device_index = (known after apply)

+ network_interface_id = (known after apply)

}

+ root_block_device {

+ delete_on_termination = (known after apply)

+ device_name = (known after apply)

+ encrypted = (known after apply)

+ iops = (known after apply)

+ kms_key_id = (known after apply)

+ tags = (known after apply)

+ throughput = (known after apply)

+ volume_id = (known after apply)

+ volume_size = (known after apply)

+ volume_type = (known after apply)

}

}

Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

module.my_instance_module.aws_instance.my_instance: Creating...

module.my_instance_module.aws_instance.my_instance: Still creating... [10s elapsed]

module.my_instance_module.aws_instance.my_instance: Still creating... [20s elapsed]

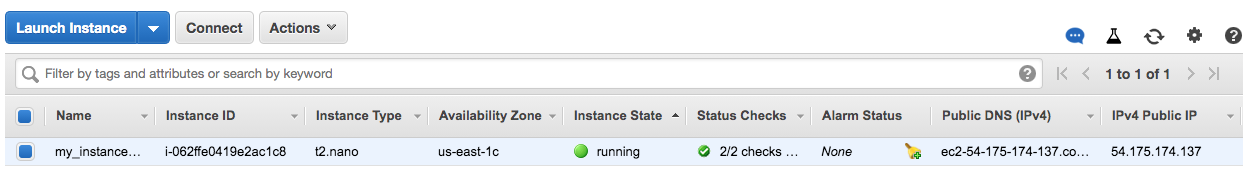

module.my_instance_module.aws_instance.my_instance: Creation complete after 26s [id=i-062ffe0419e2ac1c8]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Outputs:

instance_ip_addr = [

"54.175.174.137",

]

Check the instance from AWS console:

We can confirm it from the AWS CLI:

    $ aws ec2 describe-instances
    {
        "Reservations": [
            {
                "Groups": [],
                "Instances": [
                    {
                        "AmiLaunchIndex": 0,
                        "ImageId": "ami-04169656fea786776",
                        "InstanceId": "i-062ffe0419e2ac1c8",
                        "InstanceType": "t2.nano",
                        "KeyName": "einsteinish",
                        "LaunchTime": "2021-03-31T04:20:47+00:00",
                        "Monitoring": {
                            "State": "disabled"
                        },
                        "Placement": {
                            "AvailabilityZone": "us-east-1c",
                            "GroupName": "",
                            "Tenancy": "default"
                        },
                        "PrivateDnsName": "ip-172-31-41-218.ec2.internal",
                        "PrivateIpAddress": "172.31.41.218",
                        "ProductCodes": [],
                        "PublicDnsName": "ec2-54-175-174-137.compute-1.amazonaws.com",
                        "PublicIpAddress": "54.175.174.137",
                        "State": {
                            "Code": 16,
                            "Name": "running"
                        },
                        "StateTransitionReason": "",
                        "SubnetId": "subnet-66d25311",
                        "VpcId": "vpc-4c600529",
                        "Architecture": "x86_64",
                        ...

SSH into the instance:

    $ ssh -i ~/.ssh/einsteinish.pem ubuntu@54.175.174.137
    The authenticity of host '54.175.174.137 (54.175.174.137)' can't be established.
    ECDSA key fingerprint is SHA256:DZ7zjNX1Ab6cZt/PpCzCYH4dLiNKNGB/To64jNkDyLM.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added '54.175.174.137' (ECDSA) to the list of known hosts.
    Welcome to Ubuntu 16.04.5 LTS (GNU/Linux 4.4.0-1065-aws x86_64)

     * Documentation: https://help.ubuntu.com
     * Management: https://landscape.canonical.com
     * Support: https://ubuntu.com/advantage

      Get cloud support with Ubuntu Advantage Cloud Guest:
        http://www.ubuntu.com/business/services/cloud

    0 packages can be updated.
    0 updates are security updates.

    The programs included with the Ubuntu system are free software;
    the exact distribution terms for each program are described in the
    individual files in /usr/share/doc/*/copyright.

    Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by
    applicable law.

    To run a command as administrator (user "root"), use "sudo <command>".
    See "man sudo_root" for details.

    ubuntu@ip-172-31-41-218:~$

Clean up:

    $ terraform destroy
    ...
    Plan: 0 to add, 0 to change, 1 to destroy.

    Do you really want to destroy all resources?
      Terraform will destroy all your managed infrastructure, as shown above.
      There is no undo. Only 'yes' will be accepted to confirm.

      Enter a value: yes

    module.my_instance_module.aws_instance.my_instance: Destroying... [id=i-062ffe0419e2ac1c8]
    module.my_instance_module.aws_instance.my_instance: Still destroying... [id=i-062ffe0419e2ac1c8, 10s elapsed]
    module.my_instance_module.aws_instance.my_instance: Still destroying... [id=i-062ffe0419e2ac1c8, 20s elapsed]
    module.my_instance_module.aws_instance.my_instance: Still destroying... [id=i-062ffe0419e2ac1c8, 30s elapsed]
    module.my_instance_module.aws_instance.my_instance: Destruction complete after 32s

    Destroy complete! Resources: 1 destroyed.

In this post, the Terraform outputs were added intentionally to demonstrate how a child module exposes its resources to a parent module.

Let's look into how our module is defined in ./main.tf:

    module "my_instance_module" {
      source = "./my_modules/instance"

      ami           = "ami-04169656fea786776"
      instance_type = "t2.nano"
      instance_name = "myvm01"
    }

It is named my_instance_module.

The public IP address output is defined in that module, in ./my_modules/instance/output.tf:

    output "instance_ip_addr" {
      value       = aws_instance.my_instance.*.public_ip
      description = "The public IP address of the main instance."
    }

Here we're defining an output value named instance_ip_addr containing the IP address of an EC2 instance that our module created.

How can we access the resource attribute (public_ip) from the root output.tf?

Any configuration that calls our module can use this value in expressions as module.<MODULE NAME>.<OUTPUT NAME>. In our case that is module.my_instance_module.instance_ip_addr, where my_instance_module is the name we've used in the corresponding module block.
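A module output can be used in any expression in the calling configuration, not only passed straight through. As a sketch, the root module could build the SSH command used earlier in this post from the output. The output name ssh_command is our own invention, and the key path and user name are taken from the SSH step above.

```hcl
output "ssh_command" {
  description = "Convenience command for connecting to the instance."

  # instance_ip_addr is a list (the module uses a splat expression),
  # so we take its first element.
  value = "ssh -i ~/.ssh/einsteinish.pem ubuntu@${module.my_instance_module.instance_ip_addr[0]}"
}
```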


In this section, we will create a local submodule within our existing configuration that uses the S3 bucket resource from the AWS provider:

    ├── LICENSE
    ├── README.md
    ├── main.tf
    ├── modules
    │   └── aws-s3-static-website-bucket
    │       ├── LICENSE
    │       ├── README.md
    │       ├── main.tf
    │       ├── outputs.tf
    │       ├── variables.tf
    │       └── www
    │           ├── error.html
    │           └── index.html
    ├── outputs.tf
    └── variables.tf

Here are the files.

main.tf:

    # Terraform configuration

    provider "aws" {
      region = "us-east-1"
    }

    module "website_s3_bucket" {
      source = "./modules/aws-s3-static-website-bucket"

      bucket_name = "bogo-test-april-19-2021"

      tags = {
        Terraform   = "true"
        Environment = "dev"
      }
    }

outputs.tf:

    output "website_bucket_arn" {
      description = "ARN of the bucket"
      value       = module.website_s3_bucket.arn
    }

    output "website_bucket_name" {
      description = "Name (id) of the bucket"
      value       = module.website_s3_bucket.name
    }

    output "website_bucket_domain" {
      description = "Domain name of the bucket"
      value       = module.website_s3_bucket.domain
    }

modules/aws-s3-static-website-bucket/main.tf:

    resource "aws_s3_bucket" "s3_bucket" {
      bucket = var.bucket_name

      acl    = "public-read"
      policy = <<EOF
    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": [
                    "s3:GetObject"
                ],
                "Resource": [
                    "arn:aws:s3:::${var.bucket_name}/*"
                ]
            }
        ]
    }
    EOF

      website {
        index_document = "index.html"
        error_document = "error.html"
      }

      tags = var.tags
    }
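The heredoc policy above works, but a typo inside the JSON is only caught when AWS rejects it. As an alternative sketch (not the version this post applies), the same policy can be written with Terraform's built-in jsonencode function, so that Terraform generates the JSON string from an HCL object:

```hcl
resource "aws_s3_bucket" "s3_bucket" {
  bucket = var.bucket_name
  acl    = "public-read"

  # jsonencode builds the JSON document from an HCL object,
  # so quoting and commas are handled by Terraform.
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Sid       = "PublicReadGetObject"
        Effect    = "Allow"
        Principal = "*"
        Action    = ["s3:GetObject"]
        Resource  = ["arn:aws:s3:::${var.bucket_name}/*"]
      }
    ]
  })

  website {
    index_document = "index.html"
    error_document = "error.html"
  }

  tags = var.tags
}
```

Both forms rely on the acl, policy, and website arguments of aws_s3_bucket as they exist in the 3.x AWS provider used in this post. Later major versions of the provider moved these settings into separate resources.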

modules/aws-s3-static-website-bucket/outputs.tf:

    # Output variable definitions

    output "arn" {
      description = "ARN of the bucket"
      value       = aws_s3_bucket.s3_bucket.arn
    }

    output "name" {
      description = "Name (id) of the bucket"
      value       = aws_s3_bucket.s3_bucket.id
    }

    output "domain" {
      description = "Domain name of the bucket"
      value       = aws_s3_bucket.s3_bucket.website_domain
    }

modules/aws-s3-static-website-bucket/variables.tf:

    # Input variable definitions

    variable "bucket_name" {
      description = "Name of the s3 bucket. Must be unique."
      type        = string
    }

    variable "tags" {
      description = "Tags to set on the bucket."
      type        = map(string)
      default     = {}
    }

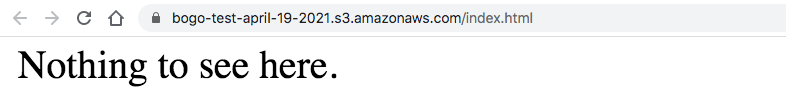

Next, run terraform apply and then upload the files in www/ folder to S3:

$ aws s3 cp modules/aws-s3-static-website-bucket/www/ s3://$(terraform output -raw website_bucket_name)/ --recursive

Then, we can access the app via https://bogo-test-april-19-2021.s3.amazonaws.com/index.html:
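As an alternative to the manual aws s3 cp step, the two pages could be managed by Terraform itself. The following is a sketch that assumes the resources are added to modules/aws-s3-static-website-bucket/main.tf. It uses the aws_s3_bucket_object resource from the 3.x AWS provider (newer provider versions call it aws_s3_object), and the resource names index and error are our own.

```hcl
resource "aws_s3_bucket_object" "index" {
  bucket       = aws_s3_bucket.s3_bucket.id
  key          = "index.html"
  source       = "${path.module}/www/index.html"
  content_type = "text/html"

  # Re-upload the object whenever the local file changes.
  etag = filemd5("${path.module}/www/index.html")
}

resource "aws_s3_bucket_object" "error" {
  bucket       = aws_s3_bucket.s3_bucket.id
  key          = "error.html"
  source       = "${path.module}/www/error.html"
  content_type = "text/html"

  etag = filemd5("${path.module}/www/error.html")
}
```

With this in place, terraform apply uploads the files and terraform destroy removes them together with the bucket.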


The Terraform Registry includes a directory of ready-to-use modules for various common purposes, which can serve as larger building-blocks for our infrastructure.

The code here is almost identical to the previous section, except that it also uses modules from the Terraform Registry. The local module under modules/aws-s3-static-website-bucket is unchanged from the previous section.

    ├── LICENSE
    ├── README.md
    ├── main.tf
    ├── modules
    │   └── aws-s3-static-website-bucket
    │       ├── LICENSE
    │       ├── README.md
    │       ├── main.tf
    │       ├── outputs.tf
    │       ├── variables.tf
    │       └── www
    │           ├── error.html
    │           └── index.html
    ├── outputs.tf
    └── variables.tf

Here are the files.

main.tf:

    # Terraform configuration

    provider "aws" {
      region = "us-east-1"
    }

    module "vpc" {
      source  = "terraform-aws-modules/vpc/aws"
      version = "2.21.0"

      name = var.vpc_name
      cidr = var.vpc_cidr

      azs             = var.vpc_azs
      private_subnets = var.vpc_private_subnets
      public_subnets  = var.vpc_public_subnets

      enable_nat_gateway = var.vpc_enable_nat_gateway

      tags = var.vpc_tags
    }

    module "ec2_instances" {
      source  = "terraform-aws-modules/ec2-instance/aws"
      version = "2.12.0"

      name           = "my-ec2-cluster"
      instance_count = 2

      ami                    = "ami-0c5204531f799e0c6"
      instance_type          = "t2.micro"
      vpc_security_group_ids = [module.vpc.default_security_group_id]
      subnet_id              = module.vpc.public_subnets[0]

      tags = {
        Terraform   = "true"
        Environment = "dev"
      }
    }

    module "website_s3_bucket" {
      source = "./modules/aws-s3-static-website-bucket"

      bucket_name = "bogo-test-april-19-2021"

      tags = {
        Terraform   = "true"
        Environment = "dev"
      }
    }
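The registry modules above are pinned to exact versions. If we would rather pick up bug-fix and minor releases without jumping to a new major version, the version argument also accepts constraint expressions. A sketch for the vpc module follows; the constraint itself is our assumption, not something the original configuration uses.

```hcl
module "vpc" {
  source = "terraform-aws-modules/vpc/aws"

  # "~> 2.21" allows 2.21 and later 2.x releases, but never 3.0.
  version = "~> 2.21"

  name = var.vpc_name
  cidr = var.vpc_cidr
}
```

The version argument is only valid for modules installed from a registry. Local paths such as ./modules/aws-s3-static-website-bucket always use whatever is on disk. To move to a newer release that satisfies the constraint, run terraform init -upgrade.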

outputs.tf:

    # Output variable definitions

    output "vpc_public_subnets" {
      description = "IDs of the VPC's public subnets"
      value       = module.vpc.public_subnets
    }

    output "ec2_instance_public_ips" {
      description = "Public IP addresses of EC2 instances"
      value       = module.ec2_instances.public_ip
    }

    output "website_bucket_arn" {
      description = "ARN of the bucket"
      value       = module.website_s3_bucket.arn
    }

    output "website_bucket_name" {
      description = "Name (id) of the bucket"
      value       = module.website_s3_bucket.name
    }

    output "website_bucket_domain" {
      description = "Domain name of the bucket"
      value       = module.website_s3_bucket.domain
    }

variables.tf:

    # Input variable definitions

    variable "vpc_name" {
      description = "Name of VPC"
      type        = string
      default     = "example-vpc"
    }

    variable "vpc_cidr" {
      description = "CIDR block for VPC"
      type        = string
      default     = "10.0.0.0/16"
    }

    variable "vpc_azs" {
      description = "Availability zones for VPC"
      type        = list(string)
      default     = ["us-east-1a", "us-east-1b", "us-east-1c"]
    }

    variable "vpc_private_subnets" {
      description = "Private subnets for VPC"
      type        = list(string)
      default     = ["10.0.1.0/24", "10.0.2.0/24"]
    }

    variable "vpc_public_subnets" {
      description = "Public subnets for VPC"
      type        = list(string)
      default     = ["10.0.101.0/24", "10.0.102.0/24"]
    }

    variable "vpc_enable_nat_gateway" {
      description = "Enable NAT gateway for VPC"
      type        = bool
      default     = true
    }

    variable "vpc_tags" {
      description = "Tags to apply to resources created by VPC module"
      type        = map(string)
      default = {
        Terraform   = "true"
        Environment = "dev"
      }
    }
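Since every root variable above has a default, the configuration applies as is. To change the values without editing variables.tf, we could add a terraform.tfvars file next to the root main.tf, which Terraform loads automatically. The values below are made-up examples.

```hcl
# terraform.tfvars (hypothetical overrides for the defaults in variables.tf)
vpc_name               = "staging-vpc"
vpc_cidr               = "10.1.0.0/16"
vpc_private_subnets    = ["10.1.1.0/24", "10.1.2.0/24"]
vpc_public_subnets     = ["10.1.101.0/24", "10.1.102.0/24"]
vpc_enable_nat_gateway = false
```

Variables that are not listed in the file (vpc_azs and vpc_tags here) keep their defaults.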

